Tested: Running OpenAI’s ‘Open-Weight’ Model on a Laptop – Here’s Why It Falls Short

Quick Summary

- OpenAI has released “gpt-oss,” a new AI model that can be run locally on machines without an internet connection.

- gpt-oss gives developers access to the model's "weights" for customization and fine-tuning, but does not provide the underlying code or training data.

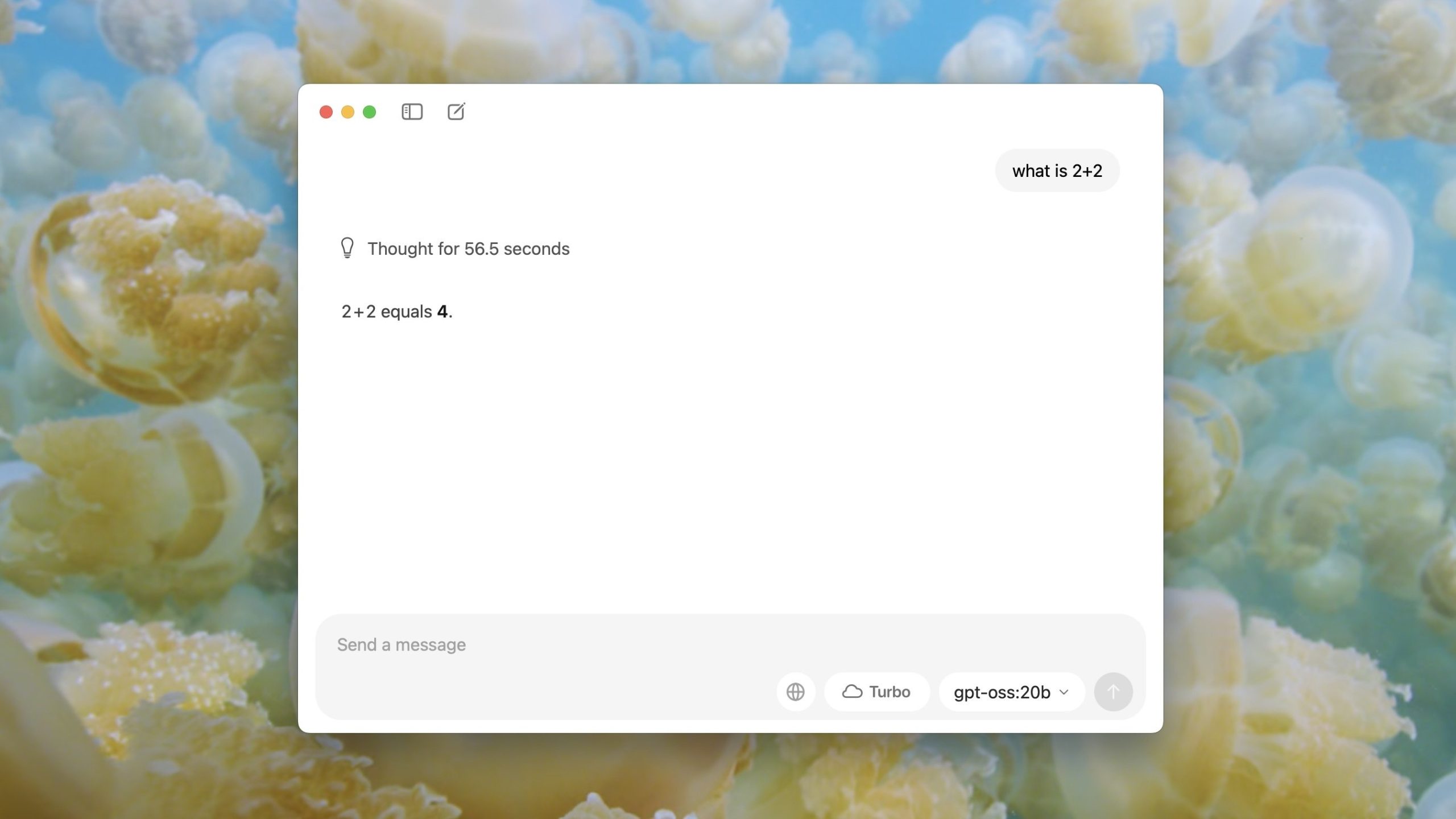

- The model comes in two versions: gpt-oss-20b (requires 16 GB of system memory) and gpt-oss-120b (requires 80 GB of system memory).

- It runs on Macs, and tools such as Ollama simplify installation and use.

- In example tests, performance varied significantly with hardware: the model ran faster on a MacBook Pro with an M3 Pro chip than on an iMac with an M1 chip.

- Simple tasks such as arithmetic and history questions took much longer than in ChatGPT (e.g., about 12 seconds, versus near-instant responses from OpenAI's GPT models running online).

- Running locally brings a privacy advantage: user queries are not sent over the internet or stored by OpenAI.
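Because the two variants differ mainly in how much memory they need, a quick check of the host machine indicates which one is worth downloading. The following is a minimal Python sketch, assuming the 16 GB and 80 GB figures from the summary above are treated as hard minimums and assuming the Ollama tags `gpt-oss:20b` and `gpt-oss:120b`; the helpers `total_memory_gb` and `pick_variant` are hypothetical names, not part of any tool.

```python
import os
from typing import Optional

# Assumption: minimum memory figures taken from the article, treated as hard cutoffs.
# Assumption: Ollama tags for the two variants. Ordered largest model first.
VARIANTS = [
    ("gpt-oss:120b", 80),  # larger model, needs about 80 GB
    ("gpt-oss:20b", 16),   # smaller model, needs about 16 GB
]


def total_memory_gb() -> float:
    """Physical RAM in GiB via POSIX sysconf (works on Linux and macOS, not Windows)."""
    page_size = os.sysconf("SC_PAGE_SIZE")
    page_count = os.sysconf("SC_PHYS_PAGES")
    return page_size * page_count / 1024**3


def pick_variant(memory_gb: float) -> Optional[str]:
    """Return the largest variant whose memory requirement fits, or None if neither does."""
    for tag, required_gb in VARIANTS:
        if memory_gb >= required_gb:
            return tag
    return None
```

For example, `pick_variant(16)` returns `"gpt-oss:20b"` and `pick_variant(8)` returns `None`. Operating systems often report slightly less than a machine's nominal RAM, so a computer sold with 16 GB may land just under the cutoff when passed `total_memory_gb()`; the thresholds are a rough guide rather than an exact rule.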
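The kind of test described in the summary (asking the local model a simple arithmetic or history question and timing the reply) can be scripted against Ollama's local HTTP API. This is a minimal sketch, assuming Ollama is serving on its default port 11434 and that the model has already been pulled under the tag `gpt-oss:20b` (for instance with `ollama pull gpt-oss:20b`); the helper names `build_payload`, `tokens_per_second`, and `ask` are hypothetical. Because the request targets `localhost`, the prompt never leaves the machine.

```python
import json
import time
import urllib.request

# Assumption: Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model: str, prompt: str) -> bytes:
    """Encode a non-streaming /api/generate request body as JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def tokens_per_second(result: dict) -> float:
    """Generation speed from Ollama's eval_count and eval_duration (in nanoseconds)."""
    duration_ns = result.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return result.get("eval_count", 0) / (duration_ns / 1e9)


def ask(model: str, prompt: str, url: str = OLLAMA_URL):
    """Send one prompt to a local Ollama server.

    Returns (answer text, wall-clock seconds, generation tokens per second).
    """
    request = urllib.request.Request(
        url,
        data=build_payload(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    start = time.perf_counter()
    with urllib.request.urlopen(request) as response:
        result = json.loads(response.read().decode("utf-8"))
    elapsed = time.perf_counter() - start
    return result["response"], elapsed, tokens_per_second(result)
```

For example, `answer, seconds, tps = ask("gpt-oss:20b", "What is 17 * 23?")` returns the reply together with the wall-clock time, the figure to compare against ChatGPT's near-instant responses. The first request also includes the time Ollama spends loading the model into memory, so sending one warm-up prompt before timing gives a fairer comparison across machines.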

For full technical details, you can check out the original article here.

Indian Opinion Analysis

The launch of OpenAI’s “gpt-oss” highlights growing demand for privacy in AI use, giving users offline large language model capabilities while offering developers more room for customization. For India’s tech community, this could appeal to businesses that prioritize secure operations or that are developing localized solutions for many languages, an essential consideration in a country as linguistically diverse as India.

However, adoption faces practical challenges. High memory requirements may deter individuals and small organizations that lack the advanced hardware needed for good performance. Moreover, the speed limitations suggest that, for now, such technology may suit niche use cases rather than mass adoption within India’s fast-growing digital ecosystem.

The move also ties into growing global debates over data protection laws, which India must navigate carefully as it pursues self-reliant systems while using global tools responsibly. For researchers and organizations balancing innovation, cost-efficiency, and regulatory compliance, locally run AI systems like gpt-oss may offer a promising middle ground.