
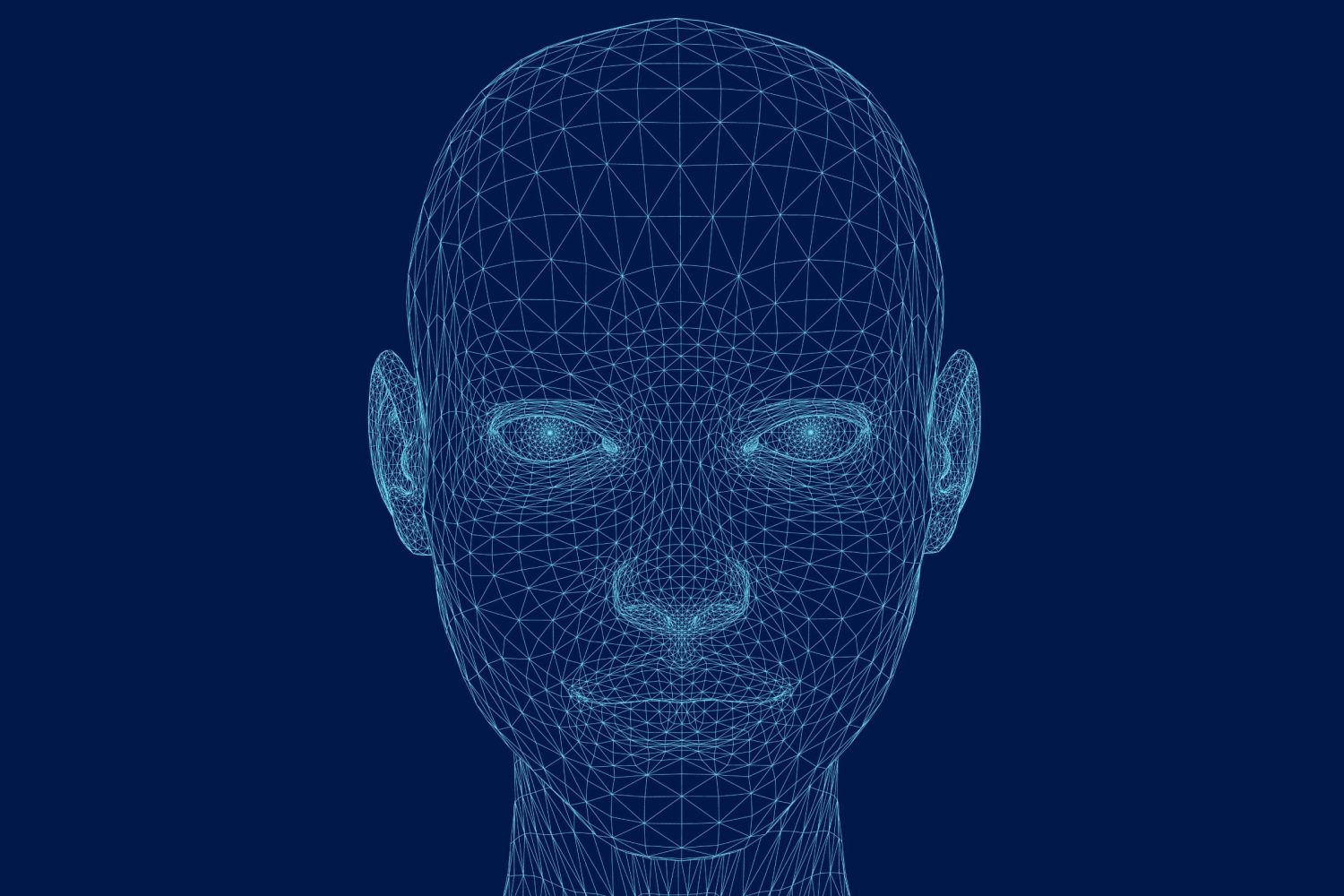

Frightening new AI can recreate your face just by analyzing your DNA


A new AI model called Difface can recreate your face just by analyzing your DNA. Developed by scientists at the Chinese Academy of Sciences, it was trained on data from nearly 10,000 Han Chinese volunteers.

Each participant provided a full genome sequence and a high-resolution 3D scan of their face. By analyzing patterns in the DNA, particularly single-letter variations called SNPs (single-nucleotide polymorphisms) that influence facial traits, the system learned to match genetic markers with physical features like nose shape, cheekbone structure, and jawline.

This allowed Difface to compress the genetic and facial data into a shared space. It then used an AI technique called diffusion to generate a digital face from that representation. The results are surprisingly accurate, too. With DNA as the only input, the system’s average error was around 3.5 millimeters. When age, sex, and BMI were added, it dropped to under 3 mm.
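To give a sense of what an "average error" in millimeters can mean, here is a minimal sketch of one common way to compare two 3D faces: take the straight-line distance between each pair of matching points and average them. This is an illustration only. The article does not say exactly how the researchers computed their figure, and the function name `mean_point_error_mm` and the toy coordinates are made up for the example.

```python
import math


def mean_point_error_mm(predicted, reference):
    """Average Euclidean distance between matching 3D points, in millimeters.

    Assumes both faces are lists of (x, y, z) tuples in millimeters, with
    point i in one list corresponding to point i in the other. This is a
    hypothetical metric for illustration, not the researchers' own method.
    """
    if not predicted or len(predicted) != len(reference):
        raise ValueError("both faces need the same non-zero number of points")
    total = sum(math.dist(p, r) for p, r in zip(predicted, reference))
    return total / len(predicted)


# Toy data: three points on a predicted face and on the real scan.
predicted = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
reference = [(3.0, 0.0, 0.0), (10.0, 4.0, 0.0), (0.0, 10.0, 2.0)]

# The three points are off by 3 mm, 4 mm, and 2 mm respectively.
error = mean_point_error_mm(predicted, reference)
print(f"average error: {error:.1f} mm")
```

In this toy case the average works out to 3 mm, roughly the scale of error reported for Difface. A real face scan would involve thousands of points rather than three.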

The most obvious application here is forensics. Law enforcement could take trace DNA from a crime scene and use a tool like this to reconstruct a suspect’s face, even with no witness or camera footage. It could be a major boon in solving crimes where no witnesses are available.


But AI facial recreation also raises major red flags. If a face can be recreated from what’s supposed to be “anonymous” DNA, privacy protections go out the window. And even if the technology were restricted to law enforcement use, there’s no way to stop companies from developing their own versions.

Further, surveillance risks become real, especially in countries already using genetic databases for population monitoring. You could theoretically recreate someone’s face from the DNA in something as simple as a single hair.

Even in medicine, where the tech could help visualize genetic disorders or predict aging patterns, ethical questions loom. What if insurers or employers got access to this data? How would they use it, and who would ensure they only use it for ethical reasons?