Google’s brilliant new AI app might convince you to buy a more expensive phone

I don’t know about you, but I want AI software to become so sophisticated that it can act as a personal assistant, similar to what we see in the movies. I also want a personal AI tool that can run on the device I’m using, whether it’s an iPhone, tablet, or PC, rather than the cloud.

It will be a while before we see the fruits of AI firms’ labors, but they are already making progress on both fronts. Personal AI assistants capable of understanding context will make products like the ChatGPT io hardware possible. Companies like Apple will then push for private, on-device AI experiences, even though Apple Intelligence isn’t a great example of AI innovation right now.

While we wait, there’s a new Google AI app that you should know about, as it might have an impact on the next iPhone or Android device you buy.

Google AI Edge Gallery is an app you can install on your phone to run AI models locally rather than in the cloud. It’s an amazing alternative to ChatGPT, Gemini, or Apple’s upcoming smarter Siri. The only problem is that you need powerful hardware to run it.

What is Google AI Edge Gallery?

Google quietly released the Android version of Google AI Edge Gallery on GitHub, meaning you have to sideload it onto your smartphone or tablet rather than find it on the Play Store. Even though it’s from Google, we typically don’t recommend sideloading software onto your devices. Instead, I’d wait for Google to bring this app to the Play Store and the App Store.

Then again, if you want to be at the forefront of AI innovation, which might include running advanced AI models locally, Google AI Edge Gallery is the software for you. Unfortunately, the app is only available on Android for now.

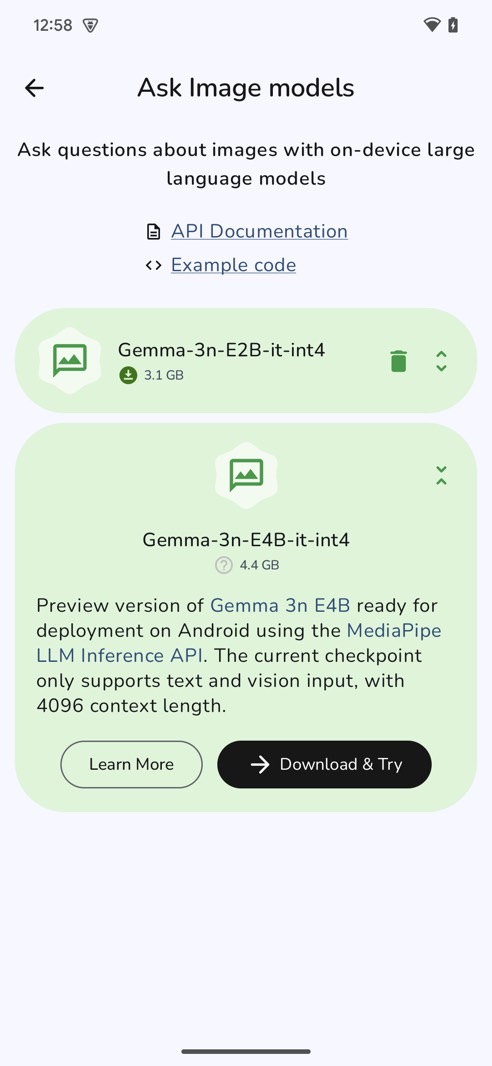

Once installed, the app gives you access to open-source AI models from Hugging Face, which you can install and run locally.

A few days ago, we talked about Google’s Gemma 3n, a powerful AI model made for on-device AI experiences. Google AI Edge Gallery gives you access to that model.

After you download it, an AI model will use your device’s processing power to answer your prompts. As we saw with the Gemma 3n demo, AI models that can run on phones can help you with all sorts of advanced questions, including understanding images and solving problems.

That all happens directly on your device, which means the AI works even when an internet connection isn’t present or while airplane mode is enabled.

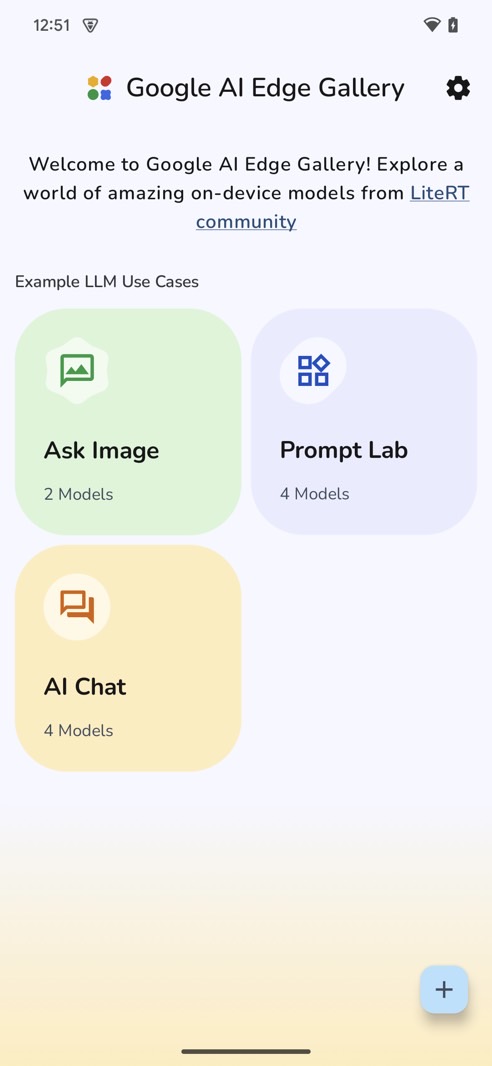

The app comes with three examples of AI use cases: Ask Image, Prompt Lab, and AI Chat. Select one of them, and you’ll see which models are compatible with your device. If you don’t have them installed already, you can download them.

What hardware do you need?

I said that Google AI Edge Gallery might convince you to buy a more expensive phone (or tablet) because running AI models locally will require the appropriate resources.

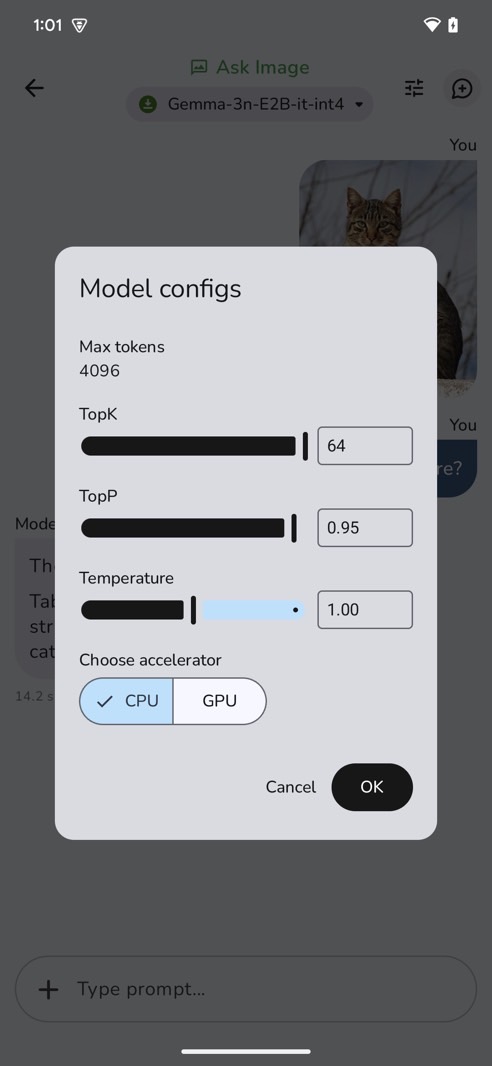

The AI models need a powerful CPU and GPU to run effectively on your device. The app even lets you decide which chip the AI will use. For example, you might want to switch processing between the CPU and GPU to improve performance.

The app also lets you keep track of your device’s temperature. You don’t want your local AI models to overheat your phone, after all. Battery life might also suffer. The more time the AI model needs for extra processing, the more energy it consumes. Again, temperature is an important parameter for the safety of your battery.

With that in mind, you’ll need a premium phone or tablet to get the most out of on-device AI apps like Google AI Edge Gallery. That might mean a device like the Galaxy S25 Ultra or the upcoming iPhone 17 Pro Max. These phones have some of the most powerful chips on the market and batteries that should be able to sustain running AI models locally for extended periods of time.

Another downside concerns the AI software itself. Even though it’s running on-device, it might not be as powerful as ChatGPT, Gemini, and other proprietary AI apps that are already available on mobile devices. Also, there’s no guarantee that Google’s app will let you create a personal assistant using an open-source AI model from Hugging Face that is optimized for mobile phones.

Still, Google’s effort is notable here, signaling the company’s interest in having AI models run locally. This is great news for the future of AI as the technology becomes more personal.