Nvidia Leads AI Inference Race, AMD Follows Closely

Quick Summary

- Nvidia’s new Blackwell GPU architecture outperformed competitors in MLPerf machine learning benchmarks, dominating AI inference.

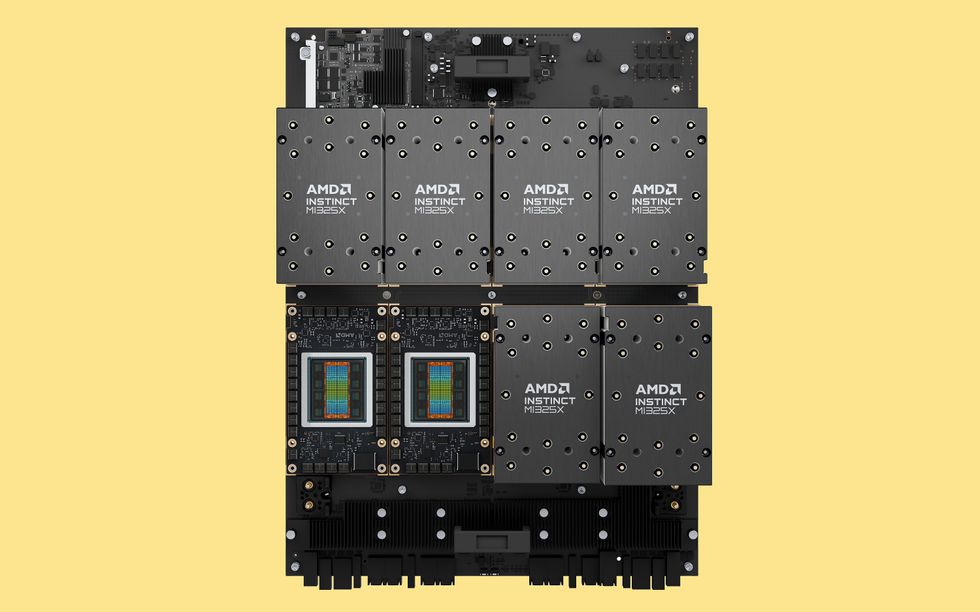

- AMD’s latest Instinct MI325X GPU matched Nvidia’s H200 on several tests, particularly the Llama2 70B benchmark for large language models.

- MLPerf added three new benchmarks this year to better reflect rapid advances in machine learning:

– Llama2-70B Interactive: focused on responsive chatbot performance.

– Llama3.1 405B: tested broader “agentic AI” contexts with a wide context window (128,000 tokens).

– RGAT: utilized graph attention networks for classification tasks using scientific literature datasets.

- Notable results from Nvidia Blackwell GPUs include:

– A Supermicro eight-B200 setup achieving nearly four times the speed of an eight-H200 system in some benchmarks.

– Full rack setups delivering up to 869,200 tokens per second (unverified by third parties).

- AMD equipped the Instinct MI325X with more memory and bandwidth to improve performance on larger models; it came within ~7% of Nvidia H200 speeds on certain tests, such as image generation and language model inference.

- Intel showcased improved CPU-only results with its Xeon chips but did not present a competitive AI accelerator; its Gaudi3 series was absent.

- Google’s TPU v6e demonstrated significant improvements but performed similarly to systems equipped with Nvidia H100 GPUs.
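Claims such as “nearly four times the speed” and “within ~7%” are ratios of throughput figures. As a rough illustration, the sketch below shows how such comparisons are typically computed. The function names and the sample numbers are hypothetical placeholders chosen for easy arithmetic; they are not MLPerf results.

```python
def speedup(new_tps: float, old_tps: float) -> float:
    """Return how many times faster the new system is than the old one."""
    if old_tps <= 0:
        raise ValueError("old_tps must be positive")
    return new_tps / old_tps


def percent_gap(challenger_tps: float, reference_tps: float) -> float:
    """Return how far the challenger trails the reference, in percent."""
    if reference_tps <= 0:
        raise ValueError("reference_tps must be positive")
    return 100.0 * (reference_tps - challenger_tps) / reference_tps


if __name__ == "__main__":
    # Hypothetical tokens-per-second values, NOT measured benchmark data.
    print(f"speedup: {speedup(4000.0, 1000.0):.1f}x")
    print(f"gap: {percent_gap(930.0, 1000.0):.1f}%")
```

With these placeholder inputs, a system producing 4,000 tokens per second is four times as fast as one producing 1,000, and a system at 930 tokens per second trails a 1,000-token reference by 7%.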

Indian Opinion Analysis

India’s growing interest in artificial intelligence and cutting-edge technology development means the advances showcased here carry important takeaways. The dominance of Nvidia’s Blackwell architecture reflects a trend toward lower-precision computing for faster processing, an approach India’s tech sector might adopt as it scales up its high-performance computing infrastructure. Similarly, AMD’s focus on GPUs with higher memory capacity aligns well with rising demand for large-scale data analysis and deep learning applications in industries such as healthcare and logistics within India.

The inclusion of new MLPerf benchmarks underlines the importance of testing the practical abilities of rapidly evolving AI models, such as chatbots and agentic systems, which are increasingly relevant to services that require real-time responsiveness or complex reasoning. That emphasis could guide India’s startups toward investing more strategically in contextually relevant hardware and software compatible with emerging global standards.

At a national level, cultivating domestic manufacturing capacity remains essential given potential dependence on external suppliers such as Nvidia and AMD. Meanwhile, Intel’s spotlight on CPU-based alternatives may point to cost-effective pathways for resource-limited deployments, a consideration worth exploring as India pursues its digitalization goals across rural areas.

By benchmarking these trends against current national priorities such as digital public goods transformation and “Make in India,” policymakers can use these insights to recalibrate strategies, ensuring competitiveness while fostering indigenous contributions to cutting-edge technology ecosystems.