Nvidia Roadmap to Gigawatt AI Data Centers, Blackwell Ultra, Vera Rubin and LLMs for Humanoid Bots


At the GPU Technology Conference (GTC) 2025, NVIDIA CEO Jensen Huang's keynote was mainly about Project GR00T, AI for humanoid robots, and Blackwell Ultra.

The Blackwell Ultra GPU is due in the second half of 2025. It is an upgraded chip with more memory and better communication, and it will likely be 1.5x to 2x as fast for some aspects of AI training and AI inference.

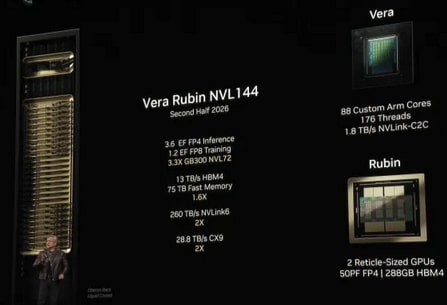

The next Nvidia chip architecture, Vera Rubin, will offer a full rack with 3.3x the performance of a comparable Blackwell Ultra rack.

Vera Rubin and Rubin Ultra may dramatically improve on that performance when they arrive in 2026 and 2027. Rubin has 50 petaflops of FP4, up from 20 petaflops in Blackwell. Rubin Ultra will feature a chip that effectively contains two Rubin GPUs connected together, with twice the performance at 100 petaflops of FP4 and nearly quadruple the memory at 1TB.
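The per-chip figures above can be turned into generation-over-generation ratios with a few lines of arithmetic. This is a minimal sketch that uses only the FP4 numbers quoted in this article; the dictionary and the `speedup` helper are illustrative names, not anything Nvidia publishes.

```python
# Per-chip FP4 throughput quoted in the article, in petaflops.
FP4_PETAFLOPS = {
    "Blackwell": 20,
    "Rubin": 50,
    "Rubin Ultra": 100,
}


def speedup(newer: str, older: str) -> float:
    """Ratio of quoted FP4 throughput between two generations."""
    return FP4_PETAFLOPS[newer] / FP4_PETAFLOPS[older]


if __name__ == "__main__":
    pairs = [
        ("Rubin", "Blackwell"),
        ("Rubin Ultra", "Rubin"),
        ("Rubin Ultra", "Blackwell"),
    ]
    for newer, older in pairs:
        print(f"{newer} vs {older}: {speedup(newer, older):.1f}x")
```

On these quoted numbers, Rubin is 2.5x Blackwell per chip and Rubin Ultra is 5x Blackwell. These are peak FP4 ratios only and say nothing about real workload speedups.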

Nvidia claims a full NVL576 rack of Rubin Ultra will offer 15 exaflops of FP4 inference and 5 exaflops of FP8 training, which it says is 14x the performance of the Blackwell Ultra rack it is shipping this year.
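As a back-of-envelope check, the rack-level multipliers can be worked backwards to an implied baseline. The sketch below assumes, and this is an assumption rather than something the article states, that both the 14x and the 3.3x claims refer to the same Blackwell Ultra rack and the same FP4 inference metric. All names are illustrative.

```python
# Rack-level figures quoted in the article.
RUBIN_ULTRA_RACK_FP4_EF = 15.0   # exaflops, FP4 inference, NVL576 rack
RUBIN_ULTRA_RACK_FP8_EF = 5.0    # exaflops, FP8 training, NVL576 rack
RUBIN_ULTRA_VS_BLACKWELL_ULTRA = 14.0
VERA_RUBIN_VS_BLACKWELL_ULTRA = 3.3


def implied_blackwell_ultra_rack_ef() -> float:
    """Baseline rack FP4 throughput implied by the 14x claim (assumed metric)."""
    return RUBIN_ULTRA_RACK_FP4_EF / RUBIN_ULTRA_VS_BLACKWELL_ULTRA


def implied_vera_rubin_rack_ef() -> float:
    """Vera Rubin rack FP4 throughput implied by the 3.3x claim (same baseline assumed)."""
    return implied_blackwell_ultra_rack_ef() * VERA_RUBIN_VS_BLACKWELL_ULTRA


if __name__ == "__main__":
    print(f"Implied Blackwell Ultra rack: {implied_blackwell_ultra_rack_ef():.2f} EF FP4")
    print(f"Implied Vera Rubin rack:      {implied_vera_rubin_rack_ef():.2f} EF FP4")
    ratio = RUBIN_ULTRA_RACK_FP4_EF / RUBIN_ULTRA_RACK_FP8_EF
    print(f"FP4 inference to FP8 training ratio: {ratio:.1f}x")
```

Under those assumptions the implied Blackwell Ultra rack comes out at roughly 1.1 exaflops of FP4 and the Vera Rubin rack at roughly 3.5 exaflops. Treat both as derived estimates, not quoted specifications.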

Nvidia says it has already recorded $11 billion in Blackwell revenue. The top four buyers alone have purchased 1.8 million Blackwell chips so far in 2025.

Nvidia’s next architecture after Vera Rubin, due in 2028, will be named Feynman (after Richard Feynman).

Nvidia revealed that a single Blackwell Ultra chip will offer the same 20 petaflops of AI performance as Blackwell, but with 288GB of HBM3e memory rather than 192GB. Meanwhile, a Blackwell Ultra DGX GB300 “Superpod” cluster will offer the same 288 CPUs, 576 GPUs and 11.5 exaflops of FP4 computing as the Blackwell version, but with 300TB of memory rather than 240TB.
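The Superpod figures can be cross-checked against the per-chip figures. The sketch below uses only numbers quoted in this article and assumes decimal units (1 TB = 1,000 GB); the variable names are illustrative.

```python
# Figures quoted in the article.
HBM_PER_GPU_GB = {"Blackwell": 192, "Blackwell Ultra": 288}
SUPERPOD_MEMORY_TB = {"Blackwell": 240, "Blackwell Ultra": 300}
SUPERPOD_GPUS = 576
SUPERPOD_FP4_EF = 11.5  # exaflops

# 1 exaflop = 1,000 petaflops, so this is the per-GPU share of the cluster total.
per_gpu_pf = SUPERPOD_FP4_EF * 1000 / SUPERPOD_GPUS

# Memory uplift per GPU versus per cluster.
hbm_uplift = HBM_PER_GPU_GB["Blackwell Ultra"] / HBM_PER_GPU_GB["Blackwell"]
superpod_uplift = SUPERPOD_MEMORY_TB["Blackwell Ultra"] / SUPERPOD_MEMORY_TB["Blackwell"]

# GPU HBM subtotal per cluster, and whatever is left of the quoted total.
hbm_total_tb = {
    gen: SUPERPOD_GPUS * gb / 1000 for gen, gb in HBM_PER_GPU_GB.items()
}
remainder_tb = {
    gen: SUPERPOD_MEMORY_TB[gen] - hbm_total_tb[gen] for gen in hbm_total_tb
}

if __name__ == "__main__":
    print(f"FP4 per GPU: {per_gpu_pf:.1f} petaflops")
    print(f"HBM uplift per GPU: {hbm_uplift:.2f}x")
    print(f"Superpod memory uplift: {superpod_uplift:.2f}x")
    for gen in hbm_total_tb:
        print(f"{gen}: {hbm_total_tb[gen]:.1f} TB HBM, {remainder_tb[gen]:.1f} TB other")
```

The per-GPU share works out to about 20 petaflops, matching the per-chip figure. Per-GPU HBM grows 1.5x while the cluster total grows only 1.25x, and the non-HBM remainder stays at roughly 130 TB in both generations. That would be consistent with the totals including memory that does not change between versions, although the article does not break the totals down.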

NVIDIA’s Blackwell architecture specifications:

Blackwell Ultra includes the NVIDIA GB300 NVL72 rack-scale solution and the NVIDIA HGX™ B300 NVL16 system. The GB300 NVL72 delivers 1.5x more AI performance than the NVIDIA GB200 NVL72 and increases Blackwell’s revenue opportunity by 50x for AI factories, compared with those built with NVIDIA Hopper™.

“AI has made a giant leap — reasoning and agentic AI demand orders of magnitude more computing performance,” said Jensen Huang, founder and CEO of NVIDIA. “We designed Blackwell Ultra for this moment — it’s a single versatile platform that can easily and efficiently do pretraining, post-training and reasoning AI inference.”

The broader Blackwell architecture offers up to 30x faster real-time inference for trillion-parameter LLMs and 25x better energy efficiency compared to its predecessor, suggesting the Ultra builds on these gains.

Blackwell Ultra raises memory capacity to 288 GB of HBM3e, using 12-Hi stacks to support cutting-edge AI and HPC applications. Built on the TSMC 4NP process with a dual-die design and a 10 TB/s interconnect, it inherits the architecture’s strengths, such as the Transformer Engine and RAS Engine, while likely delivering superior performance for memory-heavy workloads.

NVIDIA Blackwell Ultra Enables AI Reasoning

The NVIDIA GB300 NVL72 connects 72 Blackwell Ultra GPUs and 36 Arm Neoverse-based NVIDIA Grace™ CPUs in a rack-scale design, acting as a single massive GPU built for test-time scaling. With the NVIDIA GB300 NVL72, AI models can access the platform’s increased compute capacity to explore different solutions to problems and break down complex requests into multiple steps, resulting in higher-quality responses.

GB300 NVL72 is also expected to be available on NVIDIA DGX™ Cloud, an end-to-end, fully managed AI platform on leading clouds that optimizes performance with software, services and AI expertise for evolving workloads. NVIDIA DGX SuperPOD™ with DGX GB300 systems uses the GB300 NVL72 rack design to provide customers with a turnkey AI factory.
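The rack and Superpod counts quoted in this article line up if a Superpod is treated as a whole number of NVL72 racks. The sketch below is a consistency check on the article's own figures. It assumes the 576-GPU, 288-CPU Superpod described earlier is built entirely from such racks, and `racks_needed` is a hypothetical helper.

```python
# Per-rack and per-Superpod counts quoted in the article.
NVL72_GPUS = 72
NVL72_CPUS = 36
SUPERPOD_GPUS = 576
SUPERPOD_CPUS = 288


def racks_needed(total: int, per_rack: int) -> int:
    """Number of racks that supply `total` units, requiring an exact fit."""
    racks, remainder = divmod(total, per_rack)
    if remainder:
        raise ValueError(f"{total} is not a whole multiple of {per_rack}")
    return racks


if __name__ == "__main__":
    print("Racks by GPU count:", racks_needed(SUPERPOD_GPUS, NVL72_GPUS))
    print("Racks by CPU count:", racks_needed(SUPERPOD_CPUS, NVL72_CPUS))
    print("GPUs per Grace CPU:", NVL72_GPUS // NVL72_CPUS)
```

Both counts give eight racks, with two GPUs per Grace CPU.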

The NVIDIA HGX B300 NVL16 features 11x faster inference on large language models, 7x more compute and 4x larger memory compared with the Hopper generation to deliver breakthrough performance for the most complex workloads, such as AI reasoning.

In addition, the Blackwell Ultra platform is ideal for applications including:

Agentic AI, which uses sophisticated reasoning and iterative planning to autonomously solve complex, multistep problems. AI agent systems go beyond instruction-following. They can reason, plan and take actions to achieve specific goals.

Physical AI, enabling companies to generate synthetic, photorealistic videos in real time for the training of applications such as robots and autonomous vehicles at scale.

Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro are expected to deliver a wide range of servers based on Blackwell Ultra products, in addition to Aivres, ASRock Rack, ASUS, Eviden, Foxconn, GIGABYTE, Inventec, Pegatron, Quanta Cloud Technology (QCT), Wistron and Wiwynn.

Cloud service providers Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure and GPU cloud providers CoreWeave, Crusoe, Lambda, Nebius, Nscale, Yotta and YTL will be among the first to offer Blackwell Ultra-powered instances.

Project GR00T

A major focus was Project GR00T, a foundation model for humanoid robots. This ambitious initiative aims to develop robots capable of understanding natural language and emulating human movements by observing actions. Described as part of the “next wave” of AI, Project GR00T tackles some of the toughest challenges in robotics today.

Jetson Thor

Complementing Project GR00T, NVIDIA announced Jetson Thor, a new computer built specifically for humanoid robots. Equipped with generative AI (GenAI) capabilities, Jetson Thor enhances the functionality of robots, enabling more advanced and autonomous operations.

3D Blueprints, Optimization and Simulation for Next-Generation Data Centers

Using its Omniverse platform, NVIDIA unveiled a 3D blueprint for next-generation data centers. This simulation tool is designed to optimize the architecture of modern data centers, addressing critical needs like performance, energy efficiency, and scalability in the AI-driven era.

6G Research Platform

NVIDIA also revealed a 6G research platform to advance AI for radio access network (RAN) technology. This platform is poised to accelerate the development of 6G technologies, which will support future innovations such as autonomous vehicles, smart spaces, and immersive digital experiences.

Blackwell systems are ideal for running new NVIDIA Llama Nemotron Reason models and the NVIDIA AI-Q Blueprint, supported in the NVIDIA AI Enterprise software platform for production-grade AI. NVIDIA AI Enterprise includes NVIDIA NIM™ microservices, as well as AI frameworks, libraries and tools that enterprises can deploy on NVIDIA-accelerated clouds, data centers and workstations.

The Blackwell platform builds on NVIDIA’s ecosystem of powerful development tools, NVIDIA CUDA-X™ libraries, over 6 million developers and 4,000+ applications scaling performance across thousands of GPUs.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.