
Super-Turing AI for AI Using 40 Million Times Less Energy

Super-Turing AI operates more like the human brain and uses much less energy. Current systems keep learning and memory in separate parts of the hardware and shuttle huge amounts of data between them; this new AI integrates the two instead.

AI data centers consume power on the scale of gigawatts, whereas our brain runs on about 20 watts: 1 billion watts compared with just 20. Data centers consuming this much energy are not sustainable with current computing methods. So while AI's abilities are remarkable, the hardware and power generation needed to sustain it remain a serious bottleneck.

Inspired by how synapses in the brain adjust as we learn, researchers developed a “synstor circuit” (short for synaptic resistor), which uses a form of spike-timing-dependent plasticity (STDP), a biologically plausible learning rule, to update its internal parameters as it processes input. Unlike memristors or phase-change memories, which require separate phases for learning and inference, these synstors can do both simultaneously.
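For readers new to STDP: in its textbook pair-based form, the weight change depends on the time gap between a presynaptic and a postsynaptic spike. If the presynaptic spike comes first, the synapse is strengthened; if it comes second, the synapse is weakened, and both effects fade exponentially as the gap grows. The sketch below implements that generic rule. The amplitudes `a_plus` and `a_minus`, the time constant `tau_ms`, and the weight bounds are illustrative assumptions, and the synstors in the paper may use a different, hardware-specific variant.

```python
import math


def stdp_delta_w(t_pre_ms: float, t_post_ms: float,
                 a_plus: float = 0.01, a_minus: float = 0.012,
                 tau_ms: float = 20.0) -> float:
    """Weight change for one pre/post spike pair under classic pair-based STDP.

    Parameter values are illustrative assumptions, not taken from the paper.
    """
    dt = t_post_ms - t_pre_ms
    if dt > 0:
        # Pre fires before post: strengthen (potentiation).
        return a_plus * math.exp(-dt / tau_ms)
    if dt < 0:
        # Post fires before pre: weaken (depression).
        return -a_minus * math.exp(dt / tau_ms)
    return 0.0  # Simultaneous spikes: no change in this simple model.


def apply_stdp(w: float, pairs, w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Accumulate updates over (t_pre, t_post) pairs, keeping w within [w_min, w_max]."""
    for t_pre, t_post in pairs:
        w = min(w_max, max(w_min, w + stdp_delta_w(t_pre, t_post)))
    return w


if __name__ == "__main__":
    print(stdp_delta_w(10.0, 15.0))   # positive: potentiation
    print(stdp_delta_w(15.0, 10.0))   # negative: depression
    print(apply_stdp(0.5, [(0.0, 5.0), (20.0, 25.0)]))
```

Because each update needs only the two spike times at that synapse, the rule is local. That locality is what makes it practical to apply inside the memory element itself, rather than computing it elsewhere and copying the result back.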

The system hinges on a material marvel at the hardware level: a heterojunction composed of a WO₂.₈ layer, a thin film of ferroelectric Hf₀.₅Zr₀.₅O₂, and a silicon substrate. This stack allows for fine-tuning conductance values — akin to adjusting the strength of a synapse — with exquisite precision, repeatability, and durability.

More than 1.6 × 10¹¹ switching cycles were achieved without degradation, and conductance could be tuned across 1000 analog levels with a learning accuracy (conductance-update precision) of 36 picosiemens. These updates used voltage pulses as low as ±3 V and completed within 10 nanoseconds, making the system power-efficient and remarkably fast.
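A device with a finite number of conductance states can store only the nearest available level, so every requested weight change is rounded. The sketch below models this rounding with 1000 evenly spaced levels inside a conductance window. The window bounds `G_MIN_S` and `G_MAX_S` are hypothetical values chosen for illustration, since the article does not report them, and real devices are rarely this uniform.

```python
N_LEVELS = 1000          # number of analog levels, as reported for the synstor
G_MIN_S = 1e-9           # hypothetical minimum conductance (siemens)
G_MAX_S = 1e-6           # hypothetical maximum conductance (siemens)
STEP_S = (G_MAX_S - G_MIN_S) / (N_LEVELS - 1)  # spacing between adjacent levels


def quantize(g: float) -> float:
    """Clamp g to the device window and snap it to the nearest of N_LEVELS levels."""
    g = min(G_MAX_S, max(G_MIN_S, g))
    level = round((g - G_MIN_S) / STEP_S)
    return G_MIN_S + level * STEP_S


def update(g: float, delta_g: float) -> float:
    """Apply a requested conductance change; the device keeps the nearest level."""
    return quantize(g + delta_g)


if __name__ == "__main__":
    g = G_MIN_S
    g = update(g, 0.4 * STEP_S)   # too small to reach the next level: unchanged
    g = update(g, 0.6 * STEP_S)   # large enough to move up one level
    print(g, STEP_S)
```

Updates smaller than half a level step are lost in this model. More levels therefore let small, gradual learning updates actually register in the device.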

This capability allows the synstor circuit to operate in what the researchers call “Super-Turing mode”: it continuously updates its internal weights in response to environmental feedback while performing inference. If the environment changes, say through unexpected turbulence or a new obstacle, the circuit adapts on the fly without pausing for external retraining.
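The difference from a train-then-deploy pipeline is easiest to see in a loop. In the sketch below, a single controller weight acts on each input and is then corrected immediately from feedback, with no separate training phase. Halfway through the run, the environment's rule flips sign. The toy task (output should equal `k * x`, with `k` flipping from +1 to -1) is an assumption for illustration, and a plain delta (LMS) rule stands in for the synstor's STDP-based update.

```python
def run_online(steps: int = 400, lr: float = 0.1, change_at: int = 200):
    """Interleave inference and learning in one loop (no separate training phase).

    Toy setup (assumption): the 'environment' wants output = k * x, and k flips
    from +1.0 to -1.0 at step change_at, mimicking a sudden change in conditions.
    Returns the final weight and the absolute error at every step.
    """
    w = 0.0                              # controller weight, starts untrained
    errors = []
    for t in range(steps):
        k = 1.0 if t < change_at else -1.0
        x = 1.0 if t % 2 == 0 else 0.5   # deterministic input pattern
        y = w * x                        # inference: act with the current weight
        target = k * x                   # feedback from the environment
        err = target - y
        w += lr * err * x                # learning: update right away (delta rule)
        errors.append(abs(err))
    return w, errors


if __name__ == "__main__":
    w, errors = run_online()
    print(f"error just before the change: {errors[199]:.2e}")
    print(f"error right after the change: {errors[200]:.2f}")
    print(f"error at the end:             {errors[-1]:.2e}")
    print(f"final weight:                 {w:.4f}")
```

The error spikes when the rule flips and then decays while the controller keeps acting. This qualitative behavior, continuing to operate while adapting, is what the article describes for the synstor circuit.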

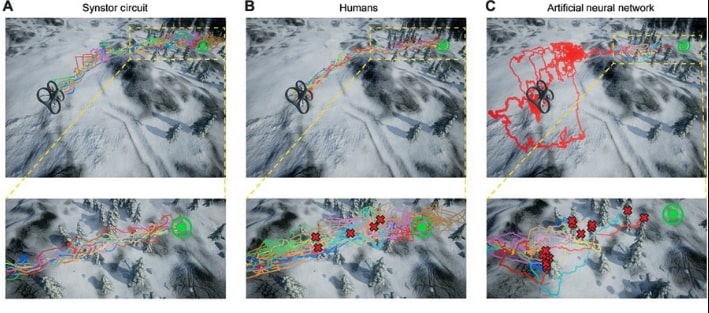

To test this new Super-Turing technology, researchers pitted their synstor-guided drone against two competitors in a simulated mountainous landscape. One was controlled by a conventional computer-based artificial neural network (ANN), and the other was flown by humans unfamiliar with the drone system.

The results were striking. The synstor circuit learned to guide the drone to its destination faster than the humans did, with an average learning time of just 4.4 seconds compared with 6.6 seconds for people.

Meanwhile, the ANN took more than 35 hours to achieve similar competence — and even then, it consistently failed when conditions changed.

The biggest win came in energy efficiency. The entire synstor Super-Turing system consumed only 158 nanowatts, compared with 6.3 watts for the conventional AI running on a high-end desktop computer, a difference of roughly 40 million times.
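The headline figure is a simple ratio of the two reported power draws. The snippet below computes it, along with the data-center comparison from the start of the article (1 GW versus 20 W). Only the reported numbers are used.

```python
SYNSTOR_POWER_W = 158e-9     # 158 nanowatts, as reported for the synstor system
DESKTOP_ANN_POWER_W = 6.3    # 6.3 watts, as reported for the desktop ANN

ratio = DESKTOP_ANN_POWER_W / SYNSTOR_POWER_W
print(f"desktop ANN / synstor: {ratio:.3e}")   # about 3.99e7, i.e. roughly 40 million

DATA_CENTER_W = 1e9          # 1 gigawatt
BRAIN_W = 20.0               # human brain
brain_ratio = DATA_CENTER_W / BRAIN_W
print(f"data center / brain:   {brain_ratio:.1e}")   # 5.0e7
```

The ratio comes out just under 4 × 10⁷, so “roughly 40 million” describes it more accurately than “over 40 million.”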

The researchers expect the synstor architecture to scale. The current prototype is a modest 8×8 crossbar, and they argue it could be scaled to circuits with millions of synstors using existing nanofabrication techniques.
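A crossbar computes where its data is stored. When voltages are applied to the rows, each column current is the sum of voltage times conductance down that column (Ohm's law combined with Kirchhoff's current law), so the stored conductances act directly as neural-network weights. The pure-Python sketch below models an ideal 8×8 crossbar. It ignores wire resistance, device noise, and the peripheral circuits a real chip needs, and the conductance and voltage values are made up for illustration.

```python
from typing import List

Matrix = List[List[float]]


def crossbar_currents(g: Matrix, v_rows: List[float]) -> List[float]:
    """Ideal crossbar: I_j = sum_i V_i * G[i][j] (Ohm's law + Kirchhoff's current law).

    g[i][j] is the conductance (siemens) at row i, column j; v_rows[i] is the
    voltage (volts) applied to row i. Returns one current (amperes) per column.
    """
    n_rows, n_cols = len(g), len(g[0])
    if len(v_rows) != n_rows:
        raise ValueError("need one voltage per row")
    return [sum(v_rows[i] * g[i][j] for i in range(n_rows)) for j in range(n_cols)]


if __name__ == "__main__":
    # Hypothetical 8x8 array: conductance grows with column index (illustrative values).
    g = [[1e-6 * (j + 1) for j in range(8)] for _ in range(8)]
    v = [0.1] * 8    # 0.1 V read voltage on every row (illustrative)
    print(crossbar_currents(g, v))
```

An N×N array performs N² multiply-accumulates in a single read. Growing from 8×8 toward millions of devices increases the work done per read without moving the weights anywhere.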

Why This Matters For The Future Of AI

This research could be a game-changer for the AI industry. Companies are racing to build larger and more powerful AI models, but their ability to scale is limited by hardware and energy constraints. In some cases, new AI applications require building entire new data centers, further increasing environmental and economic costs.

In the brain, the functions of learning and memory are not separated; they are integrated. Learning and memory rely on connections between neurons, called “synapses,” where signals are transmitted. Learning strengthens or weakens synaptic connections through a process called “synaptic plasticity,” forming new circuits and altering existing ones to store and retrieve information.

By contrast, in current computing systems, training (how the AI is taught) and memory (data storage) happen in two separate places within the computer hardware. Super-Turing AI is designed to close this efficiency gap by integrating the two, so the computer does not have to migrate enormous amounts of data from one part of its hardware to another.

“Traditional AI models rely heavily on backpropagation, a method used to adjust neural networks during training,” said Suin Yi of Texas A&M, one of the researchers. “While effective, backpropagation is not biologically plausible and is computationally intensive.”

“What we did in that paper is troubleshoot the biological implausibility present in prevailing machine learning algorithms,” he said. “Our team explores mechanisms like Hebbian learning and spike-timing-dependent plasticity — processes that help neurons strengthen connections in a way that mimics how real brains learn.”

Hebbian learning principles are often summarized as “cells that fire together, wire together.” This approach aligns more closely with how neurons in the brain strengthen their connections based on activity patterns. By integrating such biologically inspired mechanisms, the team aims to develop AI systems that require less computational power without compromising performance.
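“Fire together, wire together” maps onto a one-line update: the weight from input unit i to output unit j grows in proportion to the product of their activities, so only co-active pairs strengthen. The sketch below shows that plain Hebbian rule. The learning rate `lr` and the ceiling `w_max` are illustrative assumptions. The ceiling is there because pure Hebbian learning otherwise grows without bound, and practical systems add some form of normalization or decay.

```python
from typing import List

Matrix = List[List[float]]


def hebbian_update(w: Matrix, pre: List[float], post: List[float],
                   lr: float = 0.1, w_max: float = 1.0) -> Matrix:
    """Plain Hebbian rule: w[i][j] += lr * pre[i] * post[j], capped at w_max.

    pre[i] and post[j] are activities (1.0 = fired, 0.0 = silent).
    Returns a new weight matrix; the input matrix is not modified.
    """
    return [[min(w_max, w[i][j] + lr * pre[i] * post[j]) for j in range(len(post))]
            for i in range(len(pre))]


if __name__ == "__main__":
    w = [[0.0, 0.0], [0.0, 0.0]]
    # Input 0 fires together with both outputs; input 1 stays silent.
    w = hebbian_update(w, pre=[1.0, 0.0], post=[1.0, 1.0])
    print(w)   # only the row for input 0 has strengthened
```

Unlike backpropagation, the update needs no error signal passed backward through the network. Each weight changes using only the activity at its own two ends.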

In a test, a circuit using these components helped a drone navigate a complex environment — without prior training — learning and adapting on the fly. This approach was faster, more efficient and used less energy than traditional AI.

Science Advances – HfZrO-based synaptic resistor circuit for a Super-Turing intelligent system

Abstract

Computers based on the Turing model execute artificial intelligence (AI) algorithms that are either programmed by humans or derived from machine learning. These AI algorithms cannot be modified during the operation process according to environmental changes, resulting in significantly poorer adaptability to new environments, longer learning latency, and higher power consumption compared to the human brain. In contrast, neurobiological circuits can function while simultaneously adapting to changing conditions. Here, we present a brain-inspired Super-Turing AI model based on a synaptic resistor circuit, capable of concurrent real-time inference and learning. Without any prior learning, a circuit of synaptic resistors integrating ferroelectric HfZrO materials was demonstrated to navigate a drone toward a target position while avoiding obstacles in a simulated environment, exhibiting significantly superior learning speed, performance, power consumption, and adaptability compared to computer-based artificial neural networks. Synaptic resistor circuits enable efficient and adaptive Super-Turing AI systems in uncertain and dynamic real-world environments.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends, including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting-edge technologies, he is currently a Co-Founder of a startup and a fundraiser for high-potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.